Environment Optimization for Fostering Agent Collaboration

While traditional research in multi-agent systems focuses on improving agents’ algorithms under fixed environmental settings, our team takes a complementary perspective: We optimize the environment itself to enhance multi-agent performance. By tailoring the environment to align with agents’ capabilities, planning strategies, and data distributions, we can unlock higher levels of coordination and efficiency. Inspired by procedural content generation in video games, our key idea is to treat the multi-agent simulator as a black box, which encapsulates planning, control, and evaluation, and to search for environments that maximize its performance output.

This new direction raises several core research challenges:

- How can we represent and parameterize complex environments?

- How do we incorporate domain-specific constraints into the optimization?

- How do we define objectives when explicit environment preferences are unavailable?

- How do we efficiently search when each evaluation is expensive?
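The black-box view described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the group's method: plain hill climbing stands in for the Quality Diversity algorithms named in the publications, and `toy_simulator` is a hypothetical stand-in for a real multi-agent simulation that would return throughput. The names `is_feasible`, `mutate`, and `optimize_layout` are likewise invented for this example.

```python
import random
from collections import deque

FREE, SHELF = 0, 1

def neighbors(r, c, rows, cols):
    for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
        nr, nc = r + dr, c + dc
        if 0 <= nr < rows and 0 <= nc < cols:
            yield nr, nc

def bfs_distances(layout, start):
    """Shortest-path distance from start to every reachable free cell."""
    rows, cols = len(layout), len(layout[0])
    dist = {start: 0}
    queue = deque([start])
    while queue:
        r, c = queue.popleft()
        for nr, nc in neighbors(r, c, rows, cols):
            if layout[nr][nc] == FREE and (nr, nc) not in dist:
                dist[(nr, nc)] = dist[(r, c)] + 1
                queue.append((nr, nc))
    return dist

def is_feasible(layout, station):
    """Assumed domain constraint: station is free and all free cells connect."""
    if layout[station[0]][station[1]] != FREE:
        return False
    free = sum(row.count(FREE) for row in layout)
    return len(bfs_distances(layout, station)) == free

def toy_simulator(layout, station):
    """Hypothetical black box: higher is better (short trips to shelf sides)."""
    rows, cols = len(layout), len(layout[0])
    dist = bfs_distances(layout, station)
    trips = [d for (r, c), d in dist.items()
             if any(layout[nr][nc] == SHELF
                    for nr, nc in neighbors(r, c, rows, cols))]
    return -sum(trips) / len(trips) if trips else float("-inf")

def mutate(layout, rng):
    """Swap one shelf with one free cell, so the shelf count never changes."""
    cells = [(r, c) for r in range(len(layout)) for c in range(len(layout[0]))]
    shelves = [p for p in cells if layout[p[0]][p[1]] == SHELF]
    free = [p for p in cells if layout[p[0]][p[1]] == FREE]
    (sr, sc), (fr, fc) = rng.choice(shelves), rng.choice(free)
    child = [row[:] for row in layout]
    child[sr][sc], child[fr][fc] = FREE, SHELF
    return child

def optimize_layout(layout, station, evaluate, iterations=200, seed=0):
    rng = random.Random(seed)
    best, best_score = layout, evaluate(layout, station)
    for _ in range(iterations):
        child = mutate(best, rng)
        if not is_feasible(child, station):
            continue  # reject layouts that violate the constraints
        score = evaluate(child, station)  # the only call into the black box
        if score > best_score:
            best, best_score = child, score
    return best, best_score
```

The search loop never inspects how `evaluate` works, which is the point of the black-box framing: any planner and simulator can be swapped in. Because each call is expensive in practice, a real system would need a far more sample-efficient search than this loop.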

Warehouse Physical Layout Design

Warehouse automation today relies on fleets of robots that autonomously transport inventory pods throughout massive storage facilities. Yet, while planning and coordination algorithms for these robots have seen significant advances, the warehouse environments they operate in remain largely unchanged and are still designed around the needs of human workers.

Most layouts feature grid-like arrangements of rectangular shelf clusters and standardized aisle patterns, optimized for human accessibility and pathfinding. However, these patterns are largely irrelevant to robots, which are not constrained by human ergonomics or visual navigation. In fact, such rigid layouts can even impede robotic efficiency and throughput.

To bridge this gap, we developed layout optimization algorithms that search for high-performing warehouse layouts tailored specifically to the capabilities of robot fleets and their underlying planning stack.

✨ Notable Results:

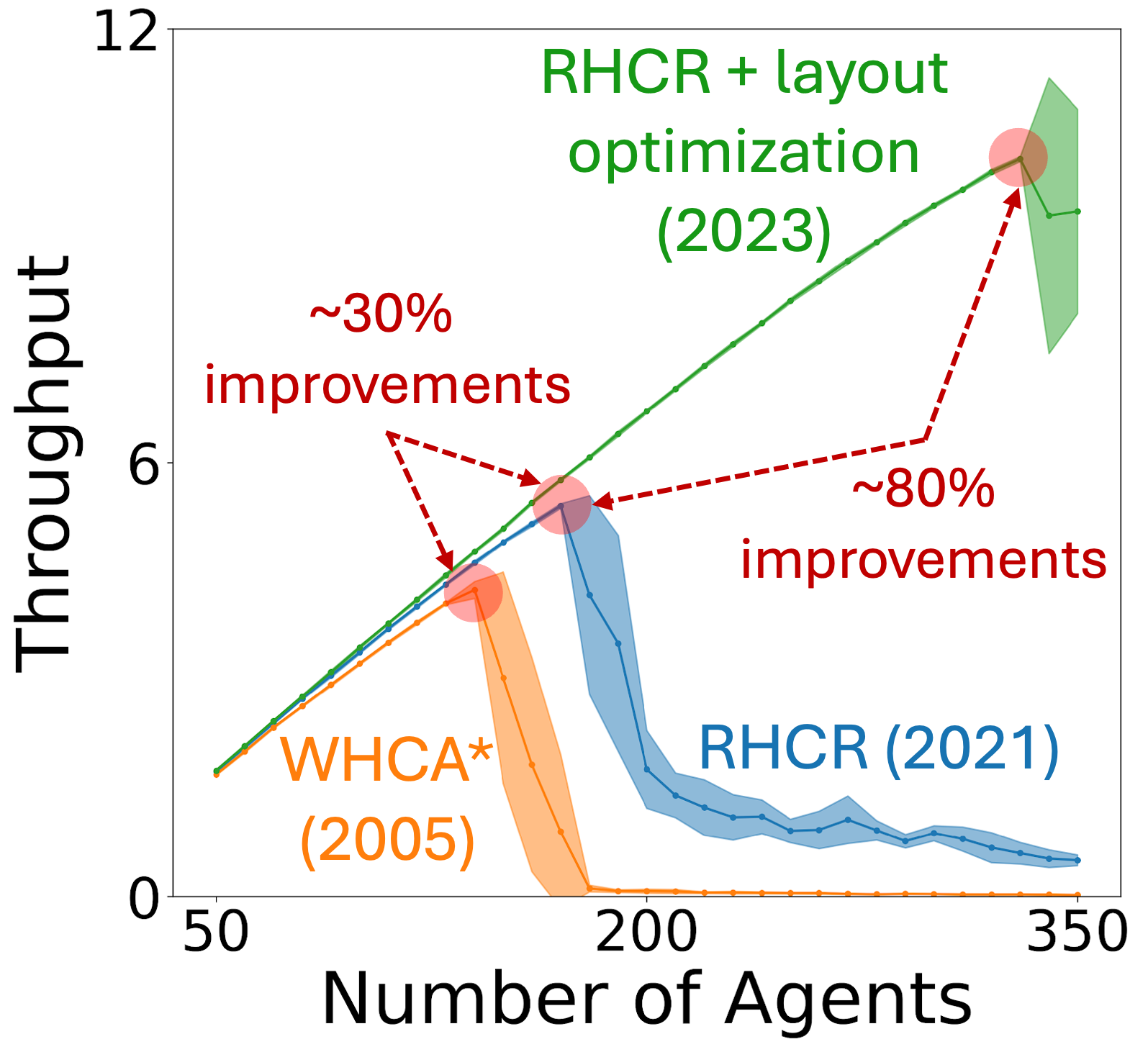

1. Layout optimization outpaces algorithmic progress: While decades of research in MAPF algorithms have brought steady gains in throughput, our results reveal that optimizing the environment can yield substantially greater performance improvements. Specifically, RHCR (a state-of-the-art MAPF solver from 2021) achieves only a 30% throughput increase over WHCA* (a basic solver from 2005). However, when paired with our layout optimization framework, RHCR achieves a remarkable 80% improvement, underscoring the often-overlooked power of environment design in multi-agent systems.

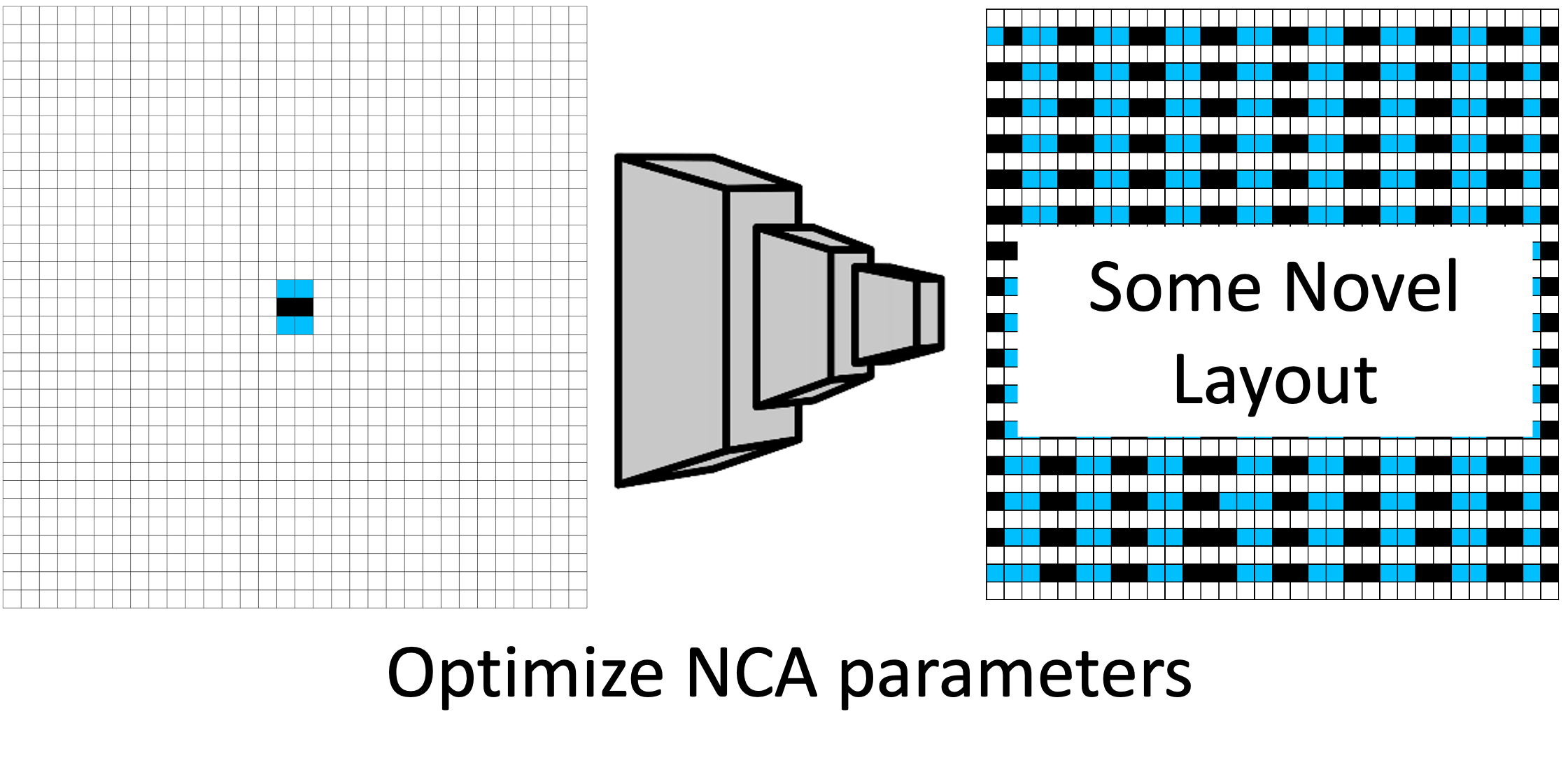

2. Optimizing a layout generator instead of a layout itself: While directly optimizing warehouse layouts can lead to performance gains, it is computationally intensive and often limited to small-scale environments. Moreover, the resulting layouts tend to lack clear structure, making them difficult for humans to interpret or trust. To overcome these limitations, we propose optimizing a layout generator based on Neural Cellular Automata (NCA). This approach offers two key advantages: (1) Scalability: The generator can be trained on small layouts and generalize to large-scale environments with thousands of agents. (2) Interpretability: The generated layouts exhibit clear local patterns, making them easier to analyze and potentially adapt manually.
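The scalability argument rests on the generator being a local rule that is applied identically to every cell, so the same parameters work on a grid of any size. The sketch below illustrates that idea only. It assumes a single state channel, a 3x3 linear rule with a sigmoid, a single active seed cell, and random placeholder weights; in the actual work the generator is a neural network whose parameters are optimized, and none of these simplifications come from the papers.

```python
import math
import random

def nca_step(state, weights, bias):
    """Apply one shared 3x3 update rule to every cell (zero padding at edges)."""
    rows, cols = len(state), len(state[0])
    new_state = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            total = bias
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    nr, nc = r + dr, c + dc
                    if 0 <= nr < rows and 0 <= nc < cols:
                        total += weights[dr + 1][dc + 1] * state[nr][nc]
            new_state[r][c] = 1.0 / (1.0 + math.exp(-total))  # sigmoid
    return new_state

def generate_layout(rows, cols, weights, bias, steps=10):
    """Grow a layout of any size from the same rule parameters."""
    # Assumed seed: one active cell in the middle of an empty grid.
    state = [[0.0] * cols for _ in range(rows)]
    state[rows // 2][cols // 2] = 1.0
    for _ in range(steps):
        state = nca_step(state, weights, bias)
    # Threshold the continuous state into shelf (1) or free (0) cells.
    return [[1 if value > 0.5 else 0 for value in row] for row in state]

# Placeholder parameters; an optimizer would search over these ten numbers.
rng = random.Random(0)
weights = [[rng.uniform(-2.0, 2.0) for _ in range(3)] for _ in range(3)]
small = generate_layout(8, 8, weights, bias=0.0)
large = generate_layout(20, 30, weights, bias=0.0)
```

The search space here is the rule's parameters rather than the cells of the layout, so its size does not grow with the environment.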

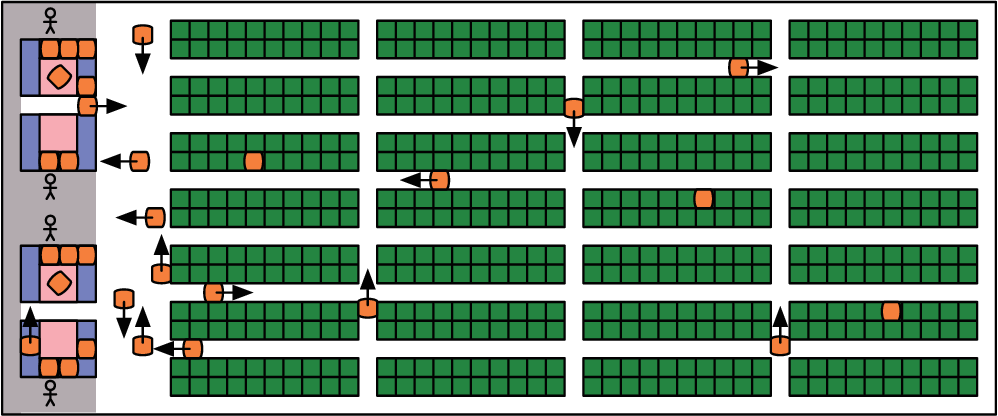

The example below shows a warehouse simulation with 300 robots transporting goods from shelves (black cells) to workstations (pink cells). Since shelves are non-traversable, robots must position themselves at adjacent endpoints (blue cells) to retrieve items. In the traditional layout (a), robots frequently experience congestion and deadlocks. The optimized layout (b) significantly improves flow and reduces congestion. The pattern-aware optimized layout (c) further enhances interpretability by introducing structured local patterns, while maintaining comparable performance.
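The cell types in this example suggest one kind of domain constraint a layout optimizer has to respect. As a hedged sketch, the check below assumes a character encoding (`@` shelf, `e` endpoint, `w` workstation, `.` floor) and the rule that every shelf needs at least one 4-adjacent endpoint. Both the encoding and the function names are assumptions made for illustration.

```python
SHELF, ENDPOINT, WORKSTATION, FLOOR = "@", "e", "w", "."

def adjacent_endpoints(grid):
    """Map each shelf cell to the endpoint cells directly next to it."""
    rows, cols = len(grid), len(grid[0])
    service = {}
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] != SHELF:
                continue
            service[(r, c)] = [
                (nr, nc)
                for nr, nc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1))
                if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == ENDPOINT
            ]
    return service

def unserviceable_shelves(grid):
    """Shelves that no robot could serve because no endpoint touches them."""
    return sorted(s for s, eps in adjacent_endpoints(grid).items() if not eps)

good = ["w.e@e.",
        "w.e@e."]
bad = ["w.e@@.",
       "w.e@@."]
print(unserviceable_shelves(good))  # []
print(unserviceable_shelves(bad))   # [(0, 4), (1, 4)]
```

A candidate layout that fails such a check would be repaired or rejected before it is sent to the simulator.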

Relevant publications

Multi-Robot Coordination and Layout Design for Automated Warehousing.

With the rapid progress in Multi-Agent Path Finding (MAPF), researchers have studied how MAPF algorithms can be deployed to coordinate hundreds of robots in large automated warehouses. While most works try to improve the throughput of such warehouses by developing better MAPF algorithms, we focus on improving the throughput by optimizing the warehouse layout. We show that, even with state-of-the-art MAPF algorithms, commonly used human-designed layouts can lead to congestion for warehouses with large numbers of robots and thus have limited scalability. We extend existing automatic scenario generation methods to optimize warehouse layouts. Results show that our optimized warehouse layouts (1) reduce traffic congestion and thus improve throughput, (2) improve the scalability of the automated warehouses by doubling the number of robots in some cases, and (3) are capable of generating layouts with user-specified diversity measures.

@inproceedings{ ZhangIJCAI23,

author = "Yulun Zhang and Matthew C. Fontaine and Varun Bhatt and Stefanos Nikolaidis and Jiaoyang Li",

title = "Multi-Robot Coordination and Layout Design for Automated Warehousing",

booktitle = "Proceedings of the International Joint Conference on Artificial Intelligence (IJCAI)",

pages = "5503-5511",

year = "2023",

doi = "10.24963/ijcai.2023/611",

}

Arbitrarily Scalable Environment Generators via Neural Cellular Automata.

We study the problem of generating arbitrarily large environments to improve the throughput of multi-robot systems. Prior work proposes Quality Diversity (QD) algorithms as an effective method for optimizing the environments of automated warehouses. However, these approaches optimize only relatively small environments, falling short when it comes to replicating real-world warehouse sizes. The challenge arises from the exponential increase in the search space as the environment size increases. Additionally, the previous methods have only been tested with up to 350 robots in simulations, while practical warehouses could host thousands of robots. In this paper, instead of optimizing environments, we propose to optimize Neural Cellular Automata (NCA) environment generators via QD algorithms. We train a collection of NCA generators with QD algorithms in small environments and then generate arbitrarily large environments from the generators at test time. We show that NCA environment generators maintain consistent, regularized patterns regardless of environment size, significantly enhancing the scalability of multi-robot systems in two different domains with up to 2,350 robots. Additionally, we demonstrate that our method scales a single-agent reinforcement learning policy to arbitrarily large environments with similar patterns.

@inproceedings{ ZhangNeurIPS23,

author = "Yulun Zhang and Matthew C. Fontaine and Varun Bhatt and Stefanos Nikolaidis and Jiaoyang Li",

title = "Arbitrarily Scalable Environment Generators via Neural Cellular Automata",

booktitle = "Proceedings of the Conference on Neural Information Processing Systems (NeurIPS)",

pages = "57212-57225",

year = "2023",

doi = "",

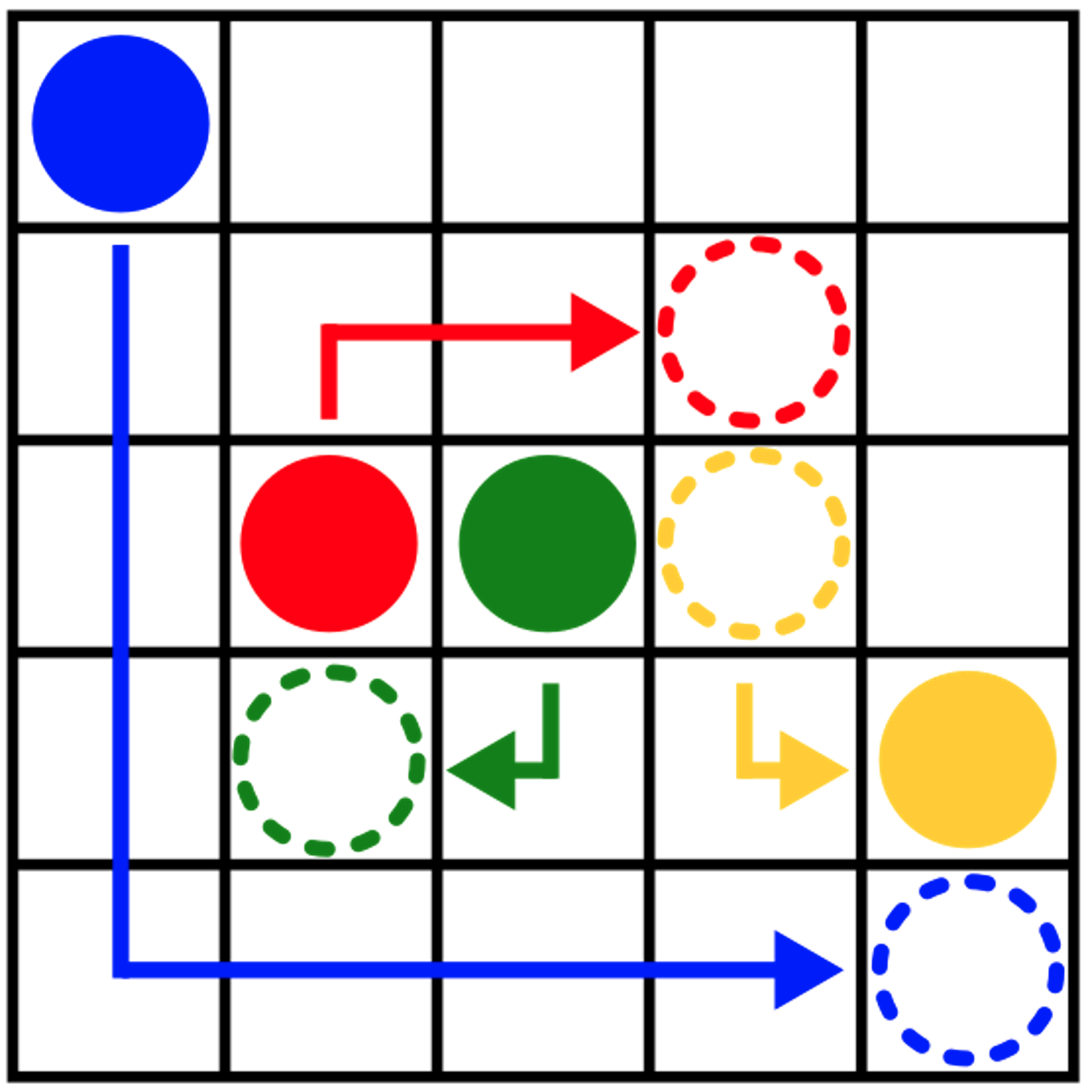

}Virtual Environment Optimization via Guidance Graphs

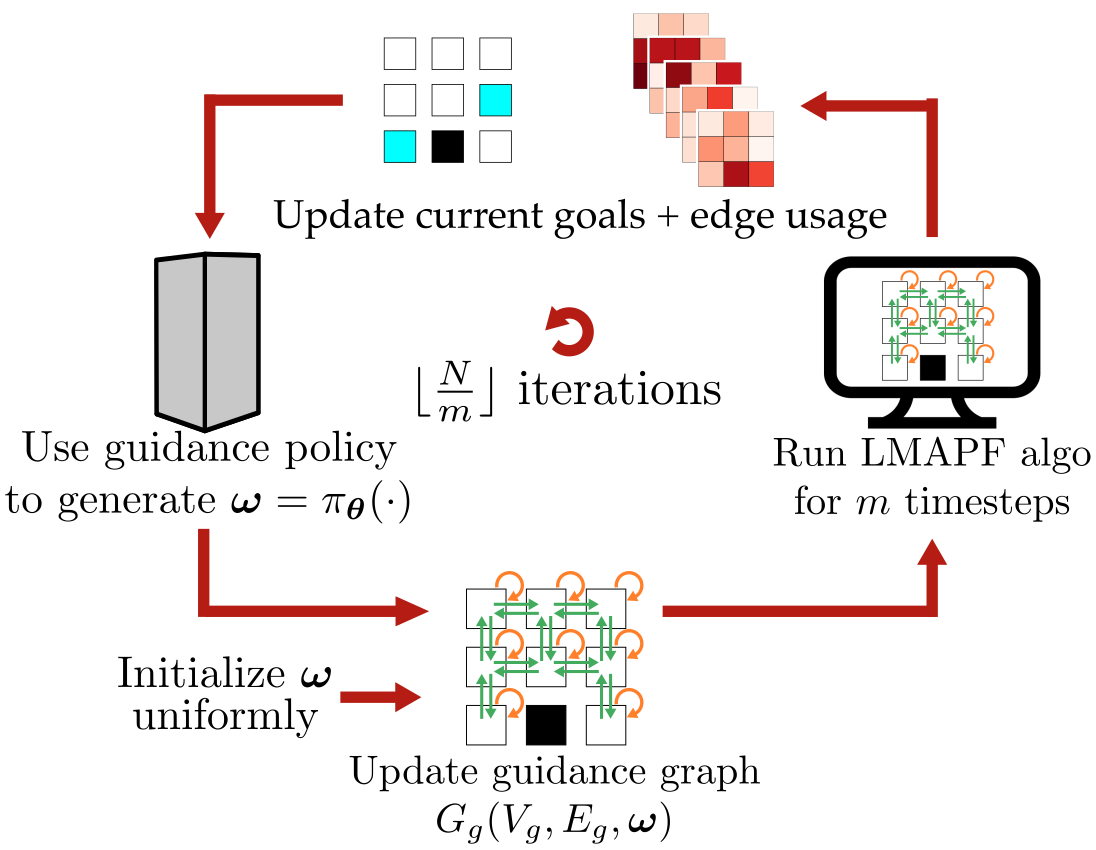

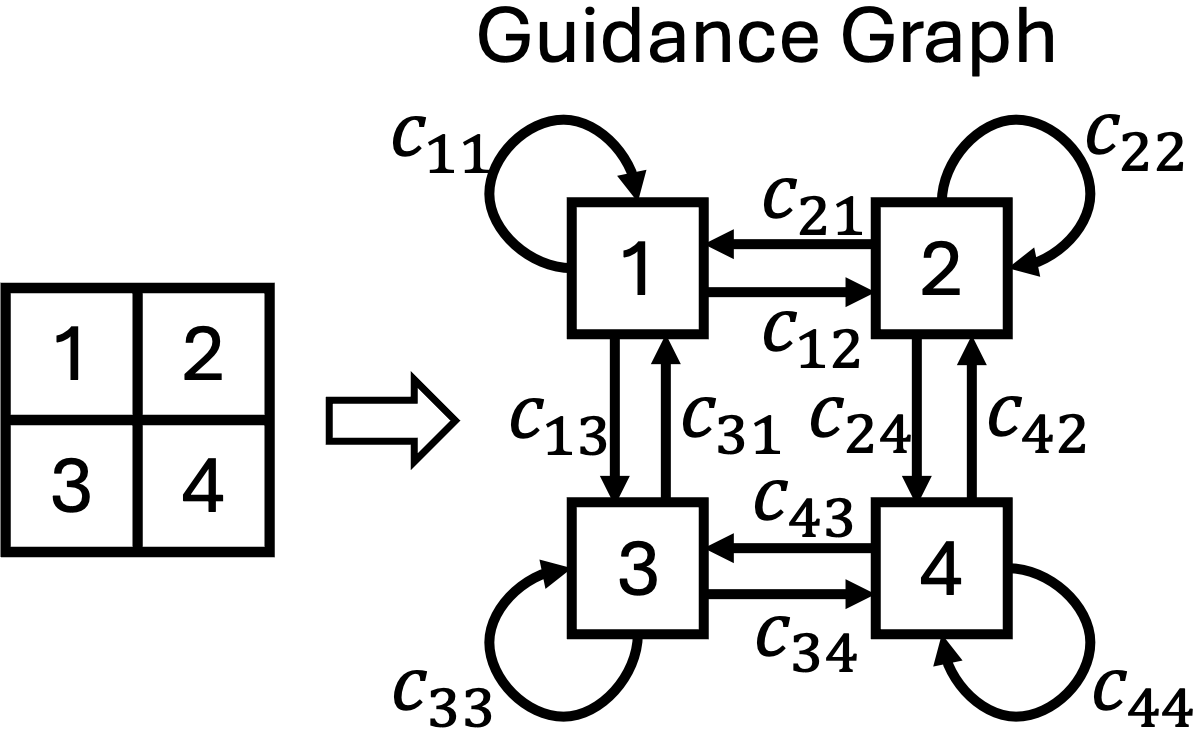

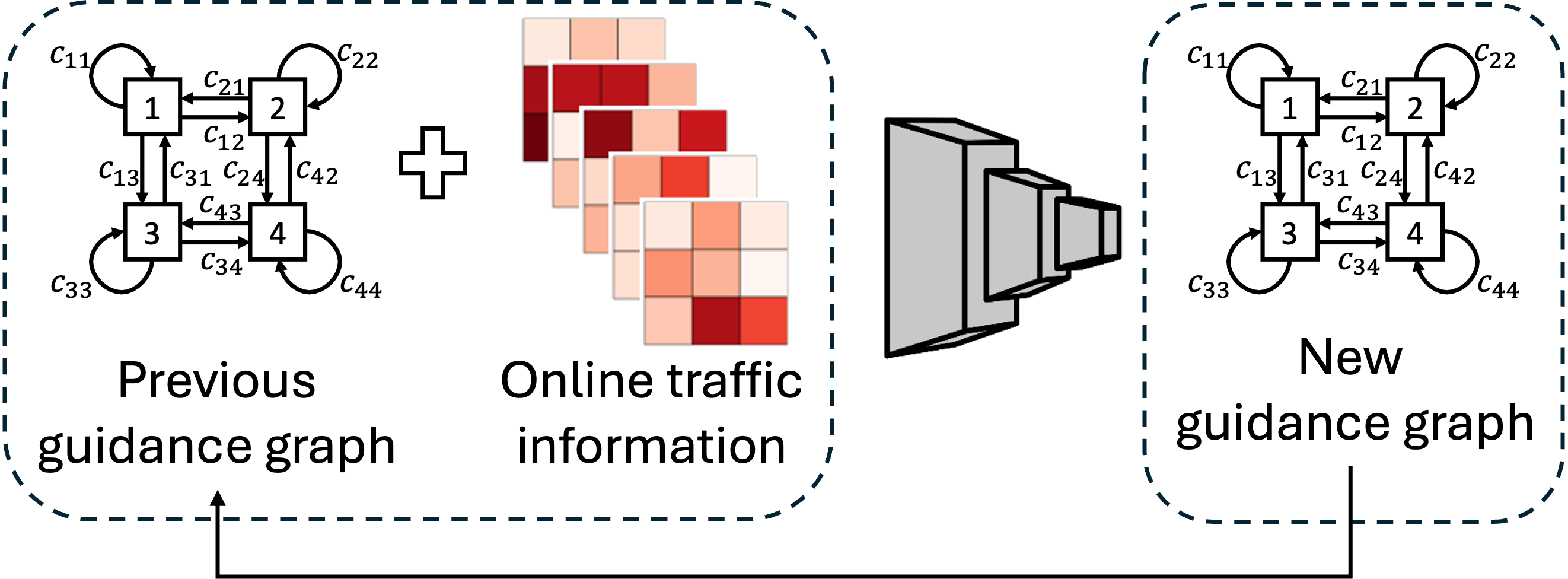

Encouraged by the promising results of physical environment optimization, we extended this idea to multi-agent domains where the environment cannot be physically reconfigured. To this end, we introduced the concept of virtual environment optimization, representing the virtual environment as a guidance graph. A guidance graph represents the traversability of the environment as a graph and assigns an edge weight (cost) to each possible action an agent can take at each vertex. By optimizing the edge weights, the guidance graph provides high-level navigational cues to agents, helping them move in more structured and cooperative patterns. Even though the physical map remains unchanged, this virtual layer can significantly reduce traffic congestion and improve overall throughput.
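To make the mechanism concrete, the sketch below shows how edge weights alone can reroute an agent on an unchanged map. It is an illustrative single-agent example, not the planners used in the work. It assumes a 4-connected grid, weights stored in a dictionary keyed by `(vertex, action)` with a default of 1, and strictly positive weights so that Dijkstra's algorithm applies. The wait action is omitted because a lone agent never benefits from waiting.

```python
import heapq

ACTIONS = {"north": (-1, 0), "south": (1, 0), "west": (0, -1), "east": (0, 1)}

def cheapest_path(rows, cols, obstacles, edge_cost, start, goal):
    """Dijkstra over the grid, using guidance costs keyed by (vertex, action)."""
    frontier = [(0.0, start)]
    best = {start: 0.0}
    parent = {}
    while frontier:
        cost, vertex = heapq.heappop(frontier)
        if vertex == goal:
            break
        if cost > best[vertex]:
            continue  # stale queue entry
        for action, (dr, dc) in ACTIONS.items():
            nxt = (vertex[0] + dr, vertex[1] + dc)
            inside = 0 <= nxt[0] < rows and 0 <= nxt[1] < cols
            if not inside or nxt in obstacles:
                continue
            new_cost = cost + edge_cost.get((vertex, action), 1.0)
            if new_cost < best.get(nxt, float("inf")):
                best[nxt] = new_cost
                parent[nxt] = vertex
                heapq.heappush(frontier, (new_cost, nxt))
    if goal not in best:
        return None, float("inf")
    path = [goal]
    while path[-1] != start:
        path.append(parent[path[-1]])
    return path[::-1], best[goal]

# Unit costs: the agent walks straight along the top row.
print(cheapest_path(3, 4, set(), {}, (0, 0), (0, 3)))
# ([(0, 0), (0, 1), (0, 2), (0, 3)], 3.0)

# Guidance: make eastbound moves in the top row expensive.
guidance = {((0, c), "east"): 5.0 for c in range(3)}
print(cheapest_path(3, 4, set(), guidance, (0, 0), (0, 3)))
# ([(0, 0), (1, 0), (1, 1), (1, 2), (1, 3), (0, 3)], 5.0)
```

The map is identical in both calls. Only the weights differ, and the second path detours through the middle row, which is how direction-dependent weights can induce one-way traffic patterns.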

✨ Notable Results:

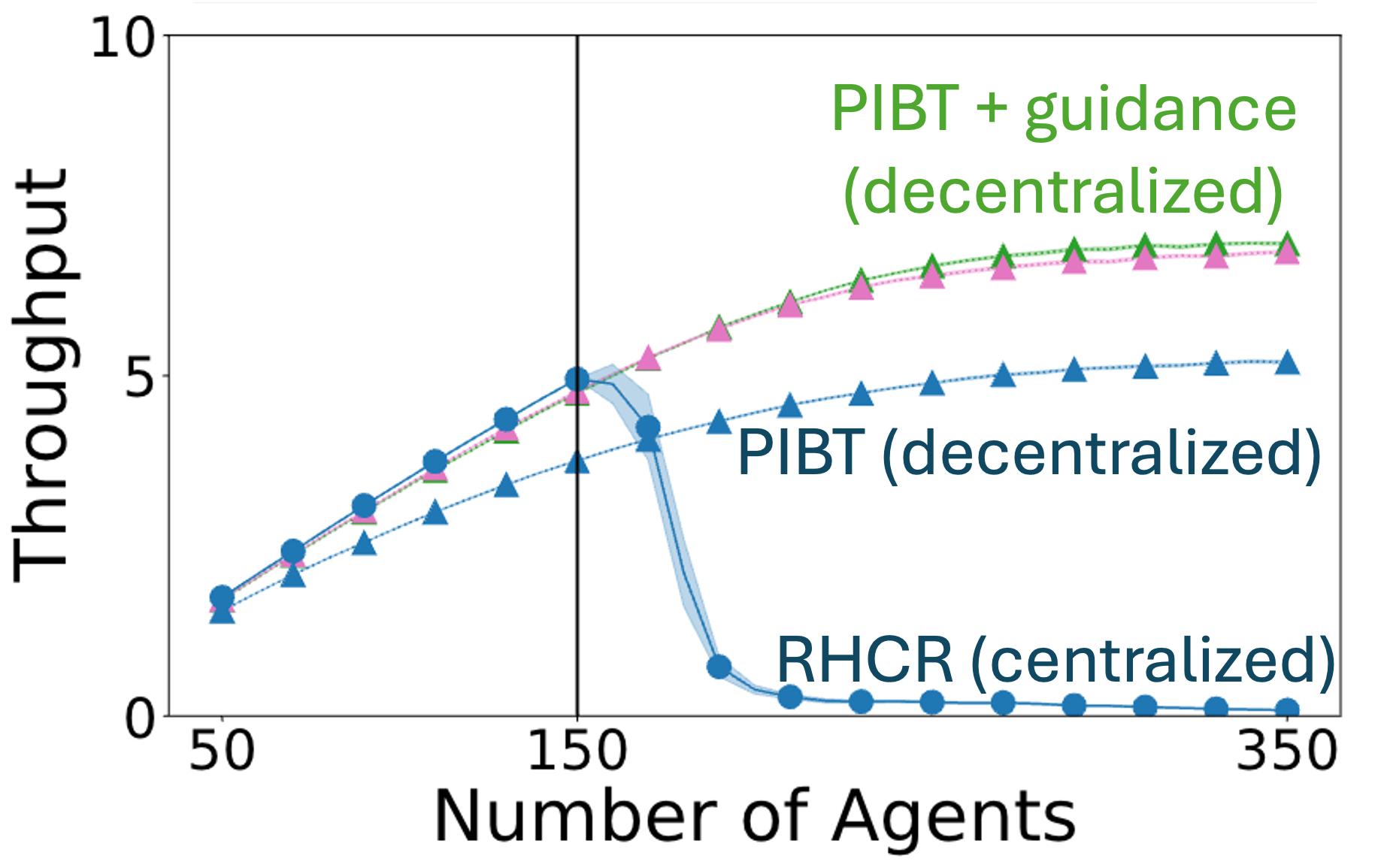

1. Closing the performance gap between centralized and decentralized planning: With a guidance graph overlaid, the decentralized PIBT planner achieved throughput comparable to the centralized RHCR for small numbers of agents and surpassed both for large numbers of agents. Note that adding a guidance graph preserves the decentralized nature of PIBT and introduces negligible computational overhead.

2. First place in the League of Robot Runners: We won a MAPF competition sponsored by Amazon Robotics in 2023, which focuses on fast solvers (< 1 sec) for challenging lifelong MAPF problems involving extreme agent density of up to 98%. In addition to the advanced planning algorithms we have developed, the utilization of the guidance graph was instrumental in our victory.

3. Online guidance graph optimization: Instead of optimizing an offline guidance graph that provides static guidance, we propose an online guidance policy that dynamically adapts the guidance graph over time using real-time traffic information. This online framework is particularly well-suited for environments where task distributions evolve, such as warehouses with shifting demand or fleets operating in changing conditions. Empirical results show significant performance gains: (1) Up to 30.75% improvement in throughput over offline (static) guidance methods. (2) Up to 52.42% improvement over existing manually designed online guidance.
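The difference between static and online guidance can be illustrated with a small update loop. The rule below is a hand-written congestion heuristic that stands in for the optimized guidance policy. The function name, the `gain` and `smoothing` parameters, and the per-edge traffic counts are all assumptions made for this sketch.

```python
def update_guidance(edge_cost, traffic_counts, base_cost=1.0, gain=0.5,
                    smoothing=0.5):
    """Blend old edge costs with a congestion penalty from recent traffic.

    edge_cost:      {(vertex, action): cost} from the previous window
    traffic_counts: {(vertex, action): number of traversals observed}
    """
    updated = {}
    for edge in set(edge_cost) | set(traffic_counts):
        # Busier edges get a higher target cost; idle edges drift to base_cost.
        target = base_cost + gain * traffic_counts.get(edge, 0)
        previous = edge_cost.get(edge, base_cost)
        updated[edge] = smoothing * previous + (1.0 - smoothing) * target
    return updated

edge = ((2, 3), "east")
costs = update_guidance({}, {edge: 10})   # a burst of eastbound traffic
print(costs[edge])                        # 3.5
costs = update_guidance(costs, {})        # traffic has moved elsewhere
print(costs[edge])                        # 2.25
```

A static guidance graph corresponds to never calling the update. The online setting replaces this fixed heuristic with a policy whose parameters are themselves optimized.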

Relevant publications

Online Guidance Graph Optimization for Lifelong Multi-Agent Path Finding.

We study the problem of optimizing a guidance policy capable of dynamically guiding the agents for lifelong Multi-Agent Path Finding based on real-time traffic patterns. Multi-Agent Path Finding (MAPF) focuses on moving multiple agents from their starts to goals without collisions. Its lifelong variant, LMAPF, continuously assigns new goals to agents. In this work, we focus on improving the solution quality of PIBT, a state-of-the-art rule-based LMAPF algorithm, by optimizing a policy to generate adaptive guidance. We design two pipelines to incorporate guidance in PIBT in two different ways. We demonstrate the superiority of the optimized policy over both static guidance and human-designed policies. Additionally, we explore scenarios where task distribution changes over time, a challenging yet common situation in real-world applications that is rarely explored in the literature.

@inproceedings{ ZangAAAI25,

author = "Hongzhi Zang and Yulun Zhang and He Jiang and Zhe Chen and Daniel Harabor and Peter J. Stuckey and Jiaoyang Li",

title = "Online Guidance Graph Optimization for Lifelong Multi-Agent Path Finding",

booktitle = "Proceedings of the AAAI Conference on Artificial Intelligence (AAAI)",

pages = "14726-14735",

year = "2025",

doi = "10.1609/aaai.v39i14.33614",

}

Deploying Ten Thousand Robots: Scalable Imitation Learning for Lifelong Multi-Agent Path Finding. (Best Paper on Multi-Robot Systems; Best Student Paper)

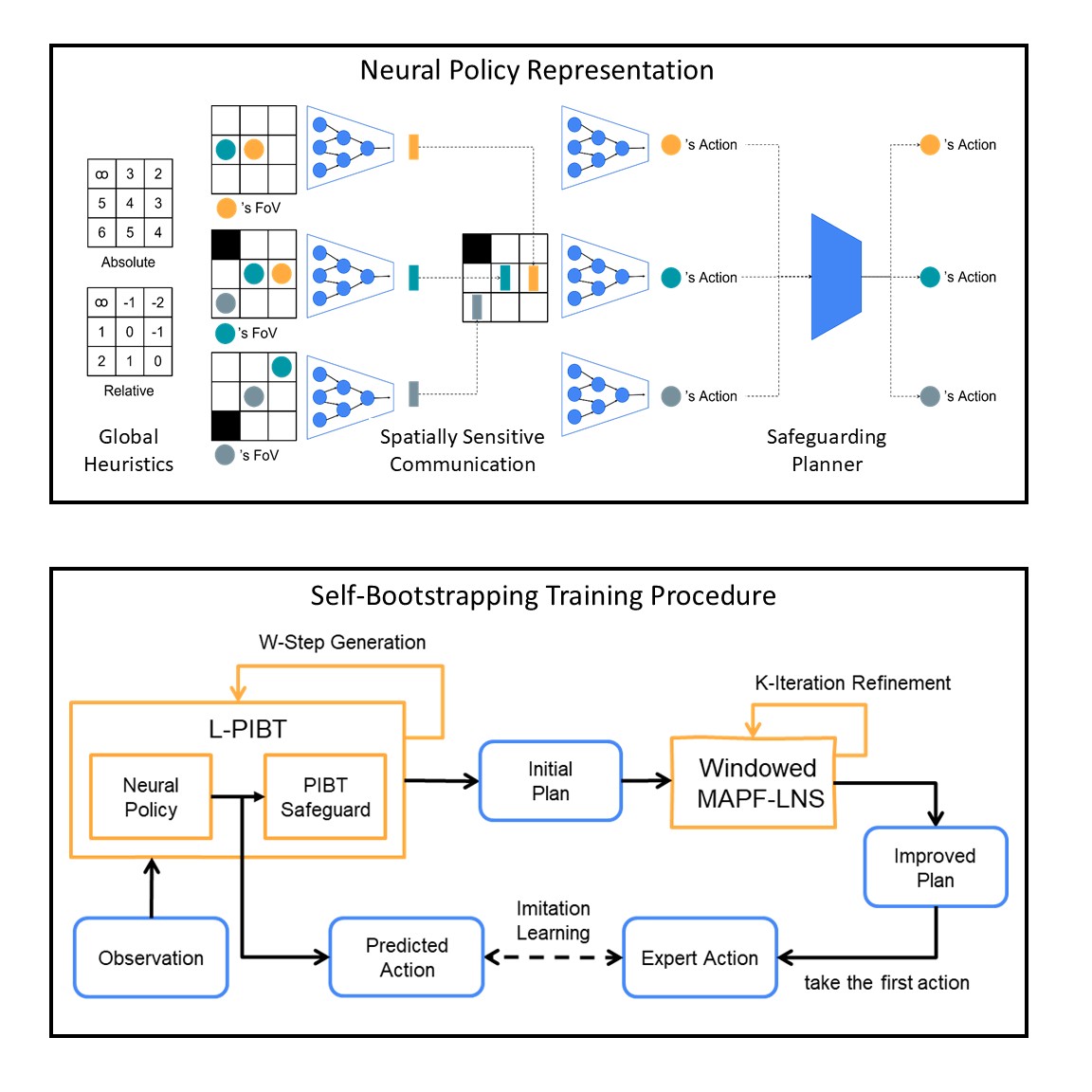

Lifelong Multi-Agent Path Finding (LMAPF) repeatedly finds collision-free paths for multiple agents that are continually assigned new goals when they reach current ones. Recently, this field has embraced learning-based methods, which reactively generate single-step actions based on individual local observations. However, it is still challenging for them to match the performance of the best search-based algorithms, especially in large-scale settings. This work proposes an imitation-learning-based LMAPF solver that introduces a novel communication module as well as systematic single-step collision resolution and global guidance techniques. Our proposed solver, Scalable Imitation Learning for LMAPF (SILLM), inherits the fast reasoning speed of learning-based methods and the high solution quality of search-based methods with the help of modern GPUs. Across six large-scale maps with up to 10,000 agents and varying obstacle structures, SILLM surpasses the best learning- and search-based baselines, achieving average throughput improvements of 137.7% and 16.0%, respectively. Furthermore, SILLM also beats the winning solution of the 2023 League of Robot Runners, an international LMAPF competition. Finally, we validated SILLM with 10 real robots and 100 virtual robots in a mock warehouse environment.

@inproceedings{ JiangICRA25,

author = "He Jiang and Yutong Wang and Rishi Veerapaneni and Tanishq Harish Duhan and Guillaume Adrien Sartoretti and Jiaoyang Li",

title = "Deploying Ten Thousand Robots: Scalable Imitation Learning for Lifelong Multi-Agent Path Finding",

booktitle = "Proceedings of the IEEE International Conference on Robotics and Automation (ICRA)",

pages = "",

year = "2025",

doi = "",

}

Guidance Graph Optimization for Lifelong Multi-Agent Path Finding.

We study how to use guidance to improve the throughput of lifelong Multi-Agent Path Finding (MAPF). Previous studies have demonstrated that, while incorporating guidance, such as highways, can accelerate MAPF algorithms, this often results in a trade-off with solution quality. In addition, how to generate good guidance automatically remains largely unexplored, with current methods falling short of surpassing manually designed ones. In this work, we introduce the guidance graph as a versatile representation of guidance for lifelong MAPF, framing Guidance Graph Optimization as the task of optimizing its edge weights. We present two GGO algorithms to automatically generate guidance for arbitrary lifelong MAPF algorithms and maps. The first method directly optimizes edge weights, while the second method optimizes an update model capable of generating edge weights. Empirically, we show that (1) our guidance graphs improve the throughput of three representative lifelong MAPF algorithms in eight benchmark maps, and (2) our update model can generate guidance graphs for as large as $93 \times 91$ maps and as many as 3,000 agents.

@inproceedings{ ZhangIJCAI24,

author = "Yulun Zhang and He Jiang and Varun Bhatt and Stefanos Nikolaidis and Jiaoyang Li",

title = "Guidance Graph Optimization for Lifelong Multi-Agent Path Finding",

booktitle = "Proceedings of the International Joint Conference on Artificial Intelligence (IJCAI)",

pages = "311-320",

year = "2024",

doi = "10.24963/ijcai.2024/35",

}

Scaling Lifelong Multi-Agent Path Finding to More Realistic Settings: Research Challenges and Opportunities. (Winner of 2023 League of Robot Runners)

Multi-Agent Path Finding (MAPF) is the problem of moving multiple agents from starts to goals without collisions. Lifelong MAPF (LMAPF) extends MAPF by continuously assigning new goals to agents. We present our winning approach to the 2023 League of Robot Runners LMAPF competition, which leads us to several interesting research challenges and future directions. In this paper, we outline three main research challenges. The first challenge is to search for high-quality LMAPF solutions within a limited planning time (e.g., 1s per step) for a large number of agents (e.g., 10,000) or extremely high agent density (e.g., 97.7%). We present future directions such as developing more competitive rule-based and anytime MAPF algorithms and parallelizing state-of-the-art MAPF algorithms. The second challenge is to alleviate congestion and the effect of myopic behaviors in LMAPF algorithms. We present future directions, such as developing moving guidance and traffic rules to reduce congestion, incorporating future prediction and real-time search, and determining the optimal agent number. The third challenge is to bridge the gaps between the LMAPF models used in the literature and real-world applications. We present future directions, such as dealing with more realistic kinodynamic models, execution uncertainty, and evolving systems.

@inproceedings{ JiangSoCS24,

author = "He Jiang and Yulun Zhang and Rishi Veerapaneni and Jiaoyang Li",

title = "Scaling Lifelong Multi-Agent Path Finding to More Realistic Settings: Research Challenges and Opportunities",

booktitle = "Proceedings of the Symposium on Combinatorial Search (SoCS)",

pages = "234-242",

year = "2024",

doi = "10.1609/socs.v17i1.31565",

}